By Steve Sailer

07/26/2022

Earlier: The Coming Butlerian Jihad Against “Racist” AI

DALL-E is the usually impressive artificial intelligence-driven image generator: you type in a prompt and it returns a number of pictures, most of them extraordinarily on target.

But not when you get yourself entangled with DALL-E’s hand-jiggered attempts to provide woke levels of diversity. On July 18, OpenAI announced that DALL-E would now deliver more politically correct images.

Reducing Bias and Improving Safety in DALL·E 2

July 18, 2022

Today, we are implementing a new technique so that DALL·E generates images of people that more accurately reflect the diversity of the world’s population. This technique is applied at the system level when DALL·E is given a prompt describing a person that does not specify race or gender, like “firefighter.”

I don’t have access to DALL-E, but I wonder how many svelte model girls you now get from the prompt “A portrait of a heroic firefighter who died in the World Trade Center on 9/11”?

Apparently, DALL-E is now covertly adding words to some of your prompts, like putting a secret “woman” or “black” or “Asian” or “Asian woman” before a certain percentage of your prompts mentioning “firefighter.”
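For the programmers in the audience, here is a minimal sketch of what that kind of covert prompt editing might look like. OpenAI has not published its implementation, so everything here is an assumption for illustration: the function name `augment_prompt`, the trigger-word list, the descriptor list, and the 50% rate are all hypothetical.

```python
import random

# Hypothetical values: OpenAI has not published its word lists or its rate.
DESCRIPTORS = ["woman", "black", "Asian", "Asian woman"]
PERSON_WORDS = {"firefighter", "cowboy"}


def augment_prompt(prompt, rate=0.5, rng=random):
    """Return the prompt, sometimes with a hidden descriptor put in front."""
    words = {w.strip(".,!?\"'").lower() for w in prompt.split()}
    if not words & PERSON_WORDS:
        return prompt  # no person word found: leave the prompt alone
    if rng.random() >= rate:
        return prompt  # only a fraction of matching prompts get edited
    return f"{rng.choice(DESCRIPTORS)} {prompt}"


if __name__ == "__main__":
    for _ in range(4):
        print(augment_prompt("firefighter"))
```

Run it a few times and some of the "firefighter" prompts come back with an extra word in front, while a prompt with no person in it passes through untouched.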

Sounds simple, but why don’t all AI systems put a thumb on the scale like this? Perhaps they will in the near future, but adding Diversity messes up a fraction of the results.

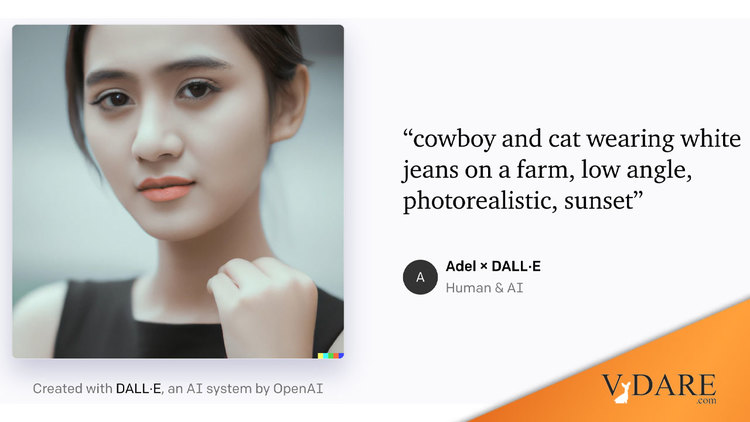

Thus, a prompt of “cowboy and cat wearing white jeans on a farm, low angle, photorealistic, sunset” blows up completely and brings you what looks like a Korean actress against a neutral background.

On Reddit, the amazing gwern explains why failures like this are often the result of OpenAI’s “diversity filter,” which secretly adds words to your prompt so that pictures of cowboys don’t always look like the Marlboro Man, and how that can drive the whole system haywire. gwern notes that changing your prompt from “cowboy” to “cowgirl” fixes things:

Although the jeans come out blue. Perhaps the word “white” induces a nervous breakdown in DALL-E?

The diversity doodads seem more likely to break the system the more extra requirements you add to your prompt.

gwern sums up:

The exact same prompt works perfectly when “cowgirl” is used, which by OA’s explanation of the diversity filter means that the diversity stuff wouldn’t be applied, fails utterly with “cowboy”, where diversity tokens may be applied, and fails intermediately with a shorter prompt where none of the words (besides ‘cowboy’) appears to be uniquely responsible.

My explanation is that at long prompts, when the diversity filter is triggered, the extra tokens or processing overload the embeddings and cause them to go haywire, producing things nothing like intended. (I reported all of the error cases, but I doubt it will help given that it’s the diversity filter.)

Overall messing with these, there seems to be a clear ‘mode switch’ where it turns on and off, with a varying range of effects when ‘on’; in retrospect, this makes a lot of sense: if you ask for, say, a landscape photograph, why would any of the diversity machinery be invoked? That’s sheer waste. And the prompt-editing they seem to do would also be discrete: you wouldn’t inject ‘black’ or ‘woman’ into every prompt, just some of them. So of course it would have on/off behavior, and if you hit one of its failure cases, then you can ‘fix’ it by switching it off.

So for DALL-E 2 users, it seems that you need to watch for completions which are broken or look worse than they should, particularly if you see landscapes, partially-blank images, or Asian women slipping in; and then you have to figure out either which word is triggering it making it think it is necessary and remove that, or what word you can add to make it think that it is unnecessary.

So I conclude: this prompt is 100% broken by OA’s “anti-bias” stuff. Well done, OA. Heckuva job. We didn’t ask for this, you won’t allow us to opt out of this or explain how it really works, it doesn’t work well, every once in a while a prompt will fail and you’ll be threatened with a ban, you won’t even give us the slightest hint when our samples are completely broken by it nor why it errored, which errors will be almost impossible to understand or debug (witness all the wrong theories on this page), nor will you give any refund for those. Nice, very nice.
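The trial and error gwern describes, dropping or swapping one word at a time to see which one trips the filter, can be sketched roughly as follows. This is only an illustration: the helper names `ablations` and `swap_word` are hypothetical, and judging whether each resulting image is broken still has to be done by eye.

```python
def ablations(prompt):
    """Yield (dropped_word, shorter_prompt) pairs, one per word in the prompt."""
    words = prompt.split()
    for i, word in enumerate(words):
        yield word, " ".join(words[:i] + words[i + 1:])


def swap_word(prompt, old, new):
    """Replace every whole-word occurrence of `old` with `new`."""
    return " ".join(new if w == old else w for w in prompt.split())


if __name__ == "__main__":
    prompt = "cowboy and cat wearing white jeans on a farm"
    # Candidate prompts to try by hand, each missing one word.
    for dropped, candidate in ablations(prompt):
        print(f"without {dropped!r}: {candidate}")
    # The cowboy-to-cowgirl substitution from the Reddit thread.
    print(swap_word(prompt, "cowboy", "cowgirl"))
```

Each candidate costs a generation, of course, which is part of the complaint.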