By Steve Sailer

10/09/2017

From the New York Times, yet another article on a study we already discussed: Why Stanford Researchers Tried to Create a ‘Gaydar’ Machine

By HEATHER MURPHY OCT. 9, 2017

Michal Kosinski felt he had good reason to teach a machine to detect sexual orientation.

An Israeli start-up had started hawking a service that predicted terrorist proclivities based on facial analysis. Chinese companies were developing facial recognition software not only to catch known criminals — but also to help the government predict who might break the law next.

And all around Silicon Valley, where Dr. Kosinski works as a professor at Stanford Graduate School of Business, entrepreneurs were talking about faces as if they were gold waiting to be mined.

Few seemed concerned. So to call attention to the privacy risks, he decided to show that it was possible to use facial recognition analysis to detect something intimate, something “people should have full rights to keep private.”

After considering atheism, he settled on sexual orientation.

Whether he has now created “A.I. gaydar,” and whether that’s even an ethical line of inquiry, has been hotly debated over the past several weeks, ever since a draft of his study was posted online.

Presented with photos of gay men and straight men, a computer program was able to determine which of the two was gay with 81 percent accuracy, according to Dr. Kosinski and co-author Yilun Wang’s paper. …

Advocacy groups like Glaad and the Human Rights Campaign denounced the study as “junk science” that “threatens the safety and privacy of LGBTQ and non-LGBTQ people alike.”

Which one is it: “junk science” that doesn’t work or good science that poses a threat to privacy?

… Some argued that the study is just the latest example of a disturbing technology-fueled revival of physiognomy, the long discredited notion that personality traits can be revealed by measuring the size and shape of a person’s eyes, nose and face.

Nineteenth-century novelists were obsessed with physiognomy, but what did nineteenth-century novelists know about humanity?

… At the heart of the controversy is rising concern about the potential for facial analysis to be misused and for findings about its effectiveness to be distorted.

Actually, that’s not “rising concern” in the singular, but two opposite concerns.

Basically, most gay men want to believe a bunch of different things, such as:

But because gays are a Privileged Group today, it’s considered evil for non-gays to notice this incoherence. Among gays, noticing shows poor Team Spirit if done in a venue where straights might hear about it.

… “At the very best, it’s a highly inaccurate science,” she said of promises to predict criminal behavior, intelligence and other character traits from faces. “At its very worst, this is racism by algorithm.”

Dr. Kosinski and Mr. Wang began by copying, or “scraping,” photos from more than 75,000 online dating profiles of men and women in the United States. …

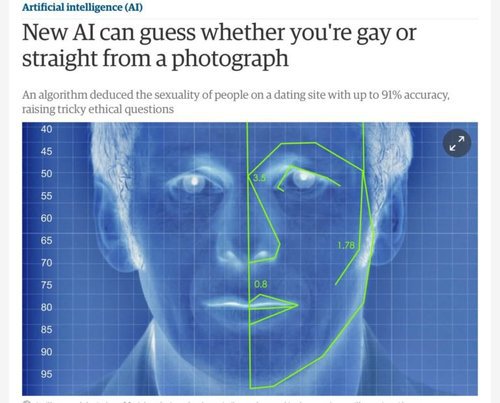

In one section of the study, the authors presented what they called “composite heterosexual faces,” left, and “composite gay faces,” right, built by averaging landmark locations of the faces classified as most and least likely to be gay. This did not sit well with some who suggested that this is just the latest example of physiognomy, rationalized by deep neural networks.

As we all know, “physiognomy” is Not Science and the fact that it works in some situations is just more proof that, scientifically speaking, You Are Bad and We Are Good.

The participants, who were procured through Amazon Mechanical Turk, a supplier for digital tasks, were advised to “use the best of your intuition.” They made the correct selection 54 percent of the time for women and 61 percent of the time for men — slightly better than flipping a coin.

Dr. Kosinski’s algorithm, by comparison, picked correctly 71 percent of the time for women and 81 percent for men. When the computer was given five photos for each person instead of just one, accuracy rose to 83 percent for women and 91 percent for the men.

Okay, but a lot of effort was put into having the algorithm “train” itself using practice examples. How much would human gaydar improve if given a chance to practice?

Nicholas Rule, a psychology professor at the University of Toronto, also studies facial perception. Using dating profile photos as well as photos taken in a lab, he has consistently found that photos of a face provide clues to all kinds of attributes, including sexuality and social class.

“Can artificial intelligence actually tell if you’re gay from your face? It feels weird — it feels like physiognomy,” he said.

“I still personally sometimes feel uncomfortable, and I have to reconcile this as a scientist — but this is what the data shows,” said Dr. Rule, who is gay.

This is a content archive of VDARE.com, which Letitia James forced off of the Internet using lawfare.