By Steve Sailer

02/17/2013

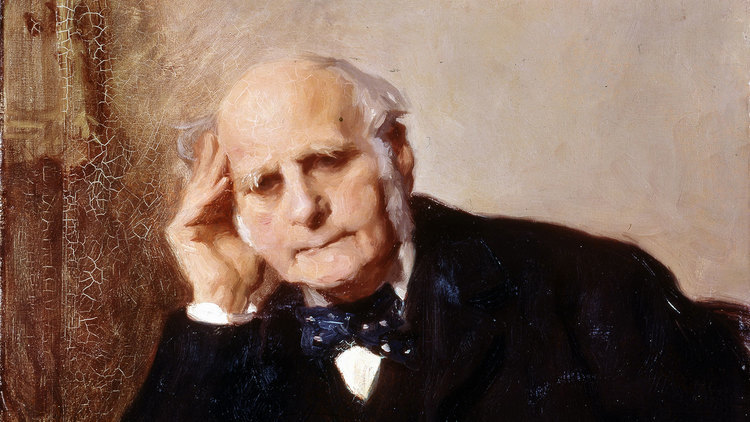

To complement the growing annual celebration of International Darwin Day on Charles Darwin’s birthday of February 12, I’m introducing International Galton Day on Francis Galton’s birthday of February 16.

Galton was of course a Victorian polymath whose inventions include the silent dog whistle, the weather map you see on TV every evening, and the practical use of fingerprints in crime-fighting. (Others had the notion before Galton of using fingerprints in detection, but had been stymied by how to retrieve matching fingerprints from filing cases full of them.)

Galton was also a giant in the history of statistics. As University of Chicago professor Stephen M. Stigler wrote in 2010 in the Journal of the Royal Statistical Society:

On September 10th, 1885, Francis Galton ushered in a new era of Statistical Enlightenment with an address to the British Association for the Advancement of Science in Aberdeen. In the process of solving a puzzle that had lain dormant in Darwin’s Origin of Species, Galton introduced multivariate analysis and paved the way towards modern Bayesian statistics. The background to this work is recounted, including the recognition of a failed attempt by Galton in 1877 as providing the first use of a rejection sampling algorithm for the simulation of a posterior distribution, and the first appearance of a proper Bayesian analysis for the normal distribution.

Stigler periodically gives a talk entitled “The Five Most Consequential Ideas in the History of Statistics.” From the Harvard Gazette:

On Monday (Sept. 29), [Stigler] stayed in town to address about 50 students and professors in a crowded third-floor classroom in the Science Center. Stigler’s talk, in professional terms, was inflammatory: “The Five Most Consequential Ideas in the History of Statistics.” …

The first idea was to combine observations in order to arrive at a simple mean. This “species of averaging,” said Stigler, found expression in 1635, through the work of English curate and astronomer Henry Gellibrand.

… The “root-N rule” is the second consequential idea, said Stigler. That’s the notion, first articulated in 1730, that the accuracy of your conclusions increases relative to the rate you accumulate observations. Specifically, to double that accuracy, you have to increase the number of observations fourfold.
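To put numbers on the root-N rule quoted above, here is a minimal Python sketch. It assumes independent observations with a common standard deviation, so the standard error of their mean is sigma divided by the square root of N. The function name `standard_error` and the value `sigma = 10.0` are illustrative choices, not anything taken from Stigler's talk.

```python
import math


def standard_error(sigma: float, n: int) -> float:
    """Standard error of the mean of n independent observations,
    each with standard deviation sigma (an assumed, simplified model)."""
    if n <= 0:
        raise ValueError("n must be positive")
    return sigma / math.sqrt(n)


sigma = 10.0  # assumed spread of a single observation (illustrative)
for n in (100, 400, 1600):
    # Each fourfold increase in n halves the standard error.
    print(n, standard_error(sigma, n))
# prints:
# 100 1.0
# 400 0.5
# 1600 0.25
```

Going from 100 to 400 observations, four times the work, only doubles the precision, which is why accuracy is expensive.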

Third on the list is the idea of “the hypothesis test,” the statistical notion that mathematical tests can determine the probability of an outcome. This idea (though not the sophisticated math now associated with it) was in place by 1248, said Stigler, when the London Mint began periodically to test its product for composition and weight.

The fourth and fifth consequential ideas in statistics both had the same source, said Stigler — an 1869 book by Victorian polymath Francis Galton. “Hereditary Genius” was a mathematical examination of how talent is inheritable.

Galton discovered through a study of biographical compilations that a “level of eminence” within populations is steady over time and over various disciplines (law, medicine). Of the one in 4,000 people who made it into such a compilation, one-tenth had a close relative on the same list.

This led to what Stigler called the fourth consequential idea: the innovative notion that statistics can be evaluated in terms of internal measurements of variability — the percentiles of bell curves (in statistics terms, “normal distribution”) that in 1869 Galton started to employ as scales for talent.

The fifth idea was based upon an empirical finding. In a series of studies between 1869 and 1889, Galton was the first to observe the phenomenon of regression toward the mean.

Essentially, the idea posits that in most realistic situations over time — Galton studied familial height variations, for example — the most extreme observed values tend to “regress” toward the center, or mean.
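Regression toward the mean is easy to see in a simulation. The sketch below is not Galton's data or his method: it assumes standardized parent and child heights with a made-up correlation of `r = 0.5`, picks out the tallest tenth of parents, and compares how far above average they are with how far above average their children are. The function name `regression_demo` and all parameter values are hypothetical.

```python
import math
import random


def regression_demo(n: int = 20000, r: float = 0.5, seed: int = 1885):
    """Simulate n parent/child pairs in standardized units (mean 0, sd 1)
    with assumed correlation r; return the mean deviation of the tallest
    10% of parents and the mean deviation of those parents' children."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        parent = rng.gauss(0.0, 1.0)
        # The child shares a fraction r of the parent's deviation; the rest is noise.
        child = r * parent + math.sqrt(1.0 - r * r) * rng.gauss(0.0, 1.0)
        pairs.append((parent, child))

    pairs.sort(key=lambda pc: pc[0], reverse=True)
    top = pairs[: n // 10]  # tallest tenth of parents
    parent_mean = sum(p for p, _ in top) / len(top)
    child_mean = sum(c for _, c in top) / len(top)
    return parent_mean, child_mean


parent_mean, child_mean = regression_demo()
print(f"tallest parents: {parent_mean:.2f} sd above average")
print(f"their children:  {child_mean:.2f} sd above average")
```

Under these assumptions the children of the tallest parents are still taller than average, but only by about `r` times as much as their parents. The extreme group has "regressed" toward the center even though the population's overall spread has not changed.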

If he could extend his list of consequential ideas in statistics, Stigler said he would include random sampling, statistical design, the graphical display of data, chi-squared distribution, and modern computation and simulation.

This is a content archive of VDARE.com, which Letitia James forced off of the Internet using lawfare.