By Steve Sailer

10/08/2021

From L.A. Times/Yahoo News:

Op-Ed: AI flaws could make your next car racist

Theodore Kim

Thu, October 7, 2021, 3:15 AM

… Using the same algorithms that Hollywood used to assemble the Incredible Hulk in “The Avengers: Endgame” from a stream of ones and zeros, photorealistic images of emergency vehicles that never existed in real life are conjured from the digital ether and fed to the AI.

I have been designing and using these algorithms for the last 20 years, starting with the software used to generate the sorting hat in “Harry Potter and the Sorcerer’s Stone,” up through recent films from Pixar, where I used to be a senior research scientist.

Using these algorithms to train AIs is extremely dangerous, because they were specifically designed to depict white humans. All the sophisticated physics, computer science and statistics that undergird this software were designed to realistically depict the diffuse glow of pale, white skin and the smooth glints in long, straight hair. In contrast, computer graphics researchers have not systematically investigated the shine and gloss that characterizes dark and Black skin, or the characteristics of Afro-textured hair. As a result, the physics of these visual phenomena are not encoded in the Hollywood algorithms.

To be sure, synthetic Black people have been depicted in film, such as in last year’s Pixar movie “Soul.” But behind the scenes, the lighting artists found that they had to push the software far outside its default settings and learn all new lighting techniques to create these characters. These tools were not designed to make nonwhite humans; even the most technically sophisticated artists in the world strained to use them effectively … White skin is faithfully depicted, but the characteristic shine of Black skin is either disturbingly missing, or distressingly overlighted. …

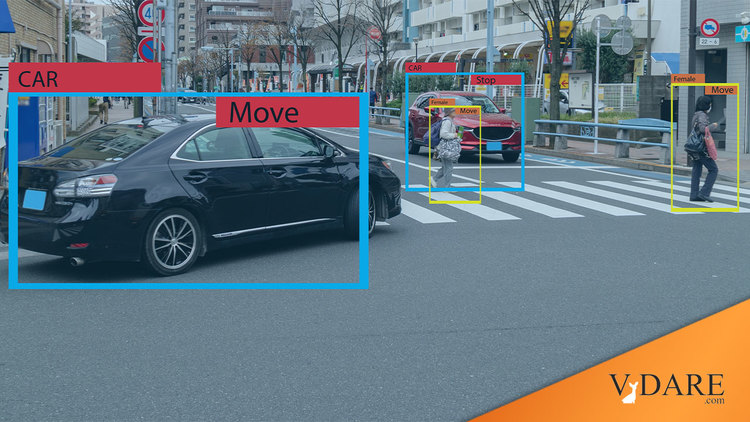

Once the data from these flawed algorithms are ingested by AIs, the provenance of their malfunctions will become near-impossible to diagnose. When Tesla Roadsters start disproportionally running over Black paramedics, or Oakland residents with natural hairstyles, the cars won’t be able to report that “nobody told me how Black skin looks in real life.” …

Synthetic training data are a convenient shortcut when real-world collection is too expensive. But AI practitioners should be asking themselves: Given the possible consequences, is it worth it? If the answer is no, they should be pushing to do things the hard way: by collecting the real-world data.

Tesla should buy the rights to the World Star Hip-Hop archives. That way, nobody will ever accuse them of racism again.