By Steve Sailer

01/29/2013

It’s difficult to hold in one’s mind the notion that two opposed statements can be true at the same time: that the glass, for example, is both partly full and partly empty.

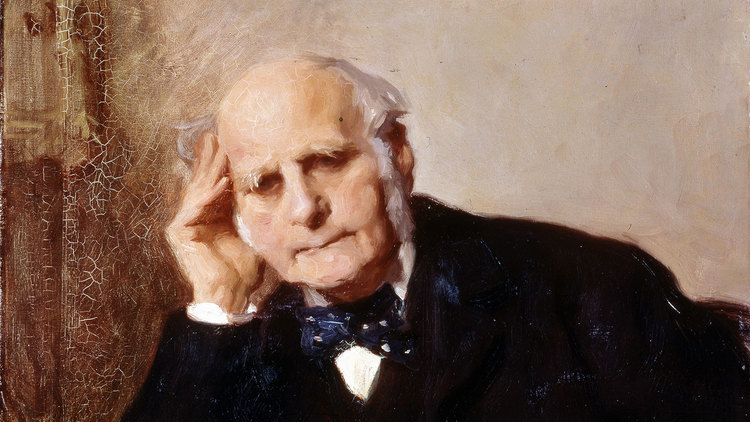

The concept of regression toward the mean is one of the more paradoxical notions ever discovered. Although it’s a fundamental aspect of everyday life, it went unrecognized until Sir Francis Galton identified it in the late 19th century.

In 2005, Jim Holt wrote an excellent review in The New Yorker of a tendentious biography of Galton, putting Galton’s historic achievement in proper perspective:

Galton might have puttered along for the rest of his life as a minor gentleman scientist had it not been for a dramatic event: the publication of Darwin’s “On the Origin of Species,” in 1859. Reading his cousin’s book, Galton was filled with a sense of clarity and purpose. One thing in it struck him with special force: to illustrate how natural selection shaped species, Darwin cited the breeding of domesticated plants and animals by farmers to produce better strains. Perhaps, Galton concluded, human evolution could be guided in the same way. But where Darwin had thought mainly about the evolution of physical features, like wings and eyes, Galton applied the same hereditary logic to mental attributes, like talent and virtue.

In other words, Galton’s thinking evolved from qualitative to quantitative.

“If a twentieth part of the cost and pains were spent in measures for the improvement of the human race that is spent on the improvements of the breed of horses and cattle, what a galaxy of genius might we not create!” he wrote in an 1864 magazine article, his opening eugenics salvo. It was two decades later that he coined the word “eugenics,” from the Greek for “wellborn.”

Galton also originated the phrase “nature versus nurture,” which still reverberates in debates today. (It was probably suggested by Shakespeare’s “The Tempest,” in which Prospero laments that his slave Caliban is “A devil, a born devil, on whose nature / Nurture can never stick.”) At Cambridge, Galton had noticed that the top students had relatives who had also excelled there; surely, he reasoned, such family success was not a matter of chance. His hunch was strengthened during his travels, which gave him a vivid sense of what he called “the mental peculiarities of different races.” Galton made an honest effort to justify his belief in nature over nurture with hard evidence. In his 1869 book “Hereditary Genius,” he assembled long lists of “eminent” men — judges, poets, scientists, even oarsmen and wrestlers — to show that excellence ran in families. To counter the objection that social advantages rather than biology might be behind this, he used the adopted sons of Popes as a kind of control group. His case elicited skeptical reviews, but it impressed Darwin. “You have made a convert of an opponent in one sense,” he wrote to Galton, “for I have always maintained that, excepting fools, men did not differ much in intellect, only in zeal and hard work.” Yet Galton’s labors had hardly begun. If his eugenic utopia was to be a practical possibility, he needed to know more about how heredity worked. His belief in eugenics thus led him to try to discover the laws of inheritance. And that, in turn, led him to statistics.

Statistics at that time was a dreary welter of population numbers, trade figures, and the like. It was devoid of mathematical interest, save for a single concept: the bell curve. The bell curve was first observed when eighteenth-century astronomers noticed that the errors in their measurements of the positions of planets and other heavenly bodies tended to cluster symmetrically around the true value. A graph of the errors had the shape of a bell. In the early nineteenth century, a Belgian astronomer named Adolphe Quetelet observed that this “law of error” also applied to many human phenomena. Gathering information on the chest sizes of more than five thousand Scottish soldiers, for example, Quetelet found that the data traced a bell-shaped curve centered on the average chest size, about forty inches.

As a matter of mathematics, the bell curve is guaranteed to arise whenever some variable (like human height) is determined by lots of little causes (like genes, health, and diet) operating more or less independently. For Quetelet, the bell curve represented accidental deviations from an ideal he called l’homme moyen — the average man. When Galton stumbled upon Quetelet’s work, however, he exultantly saw the bell curve in a new light: what it described was not accidents to be overlooked but differences that revealed the variability on which evolution depended. His quest for the laws that governed how these differences were transmitted from one generation to the next led to what Brookes justly calls “two of Galton’s greatest gifts to science”: regression and correlation.
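The claim that many small, independent causes produce a bell curve (the central limit theorem, in modern terms; strictly it holds approximately, and when the causes add up) can be checked with a short simulation. This is a minimal sketch under stated assumptions: the "causes" are hypothetical random nudges, each uniform on [-1, 1], standing in for genes, health, and diet. A true bell curve puts about 68% of cases within one standard deviation of the average, while a single flat (uniform) cause puts only about 58% there.

```python
import random
import statistics


def simulated_trait(rng: random.Random, n_causes: int = 100) -> float:
    """One individual's trait value: a baseline plus many small independent nudges.

    The nudges are hypothetical stand-ins for causes like genes, health, and diet.
    Each is uniform on [-1, 1], so no single cause is bell-shaped on its own.
    """
    return 100.0 + sum(rng.uniform(-1.0, 1.0) for _ in range(n_causes))


def share_within_one_sd(values: list[float]) -> float:
    """Fraction of values lying within one standard deviation of their mean."""
    mu = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return sum(abs(v - mu) <= sd for v in values) / len(values)


if __name__ == "__main__":
    rng = random.Random(1)  # fixed seed so the run is repeatable
    summed = [simulated_trait(rng) for _ in range(10_000)]
    single = [rng.uniform(-1.0, 1.0) for _ in range(10_000)]
    # Expect roughly 0.68 for the sum of many causes and roughly 0.58 for one cause.
    print(f"many small causes: {share_within_one_sd(summed):.3f}")
    print(f"one cause alone:   {share_within_one_sd(single):.3f}")
```

No individual nudge looks like a bell, yet their sum does, which is the point of the paragraph above.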

Although Galton was more interested in the inheritance of mental abilities, he knew that they would be hard to measure. So he focussed on physical traits, like height. The only rule of heredity known at the time was the vague “Like begets like.” Tall parents tend to have tall children, while short parents tend to have short children. But individual cases were unpredictable. Hoping to find some larger pattern, in 1884 Galton set up an “anthropometric laboratory” in London. Drawn by his fame, thousands of people streamed in and submitted to measurement of their height, weight, reaction time, pulling strength, color perception, and so on. …

After obtaining height data from two hundred and five pairs of parents and nine hundred and twenty-eight of their adult children, Galton plotted the points on a graph, with the parents’ heights represented on one axis and the children’s on the other. He then pencilled a straight line through the cloud of points to capture the trend it represented. The slope of this line turned out to be two-thirds. What this meant was that exceptionally tall (or short) parents had children who, on average, were only two-thirds as exceptional as they were. In other words, when it came to height children tended to be less exceptional than their parents. The same, he had noticed years earlier, seemed to be true in the case of “eminence”: the children of J. S. Bach, for example, may have been more musically distinguished than average, but they were less distinguished than their father. Galton called this phenomenon “regression toward mediocrity.”
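The arithmetic of "two-thirds as exceptional" can be sketched in a few lines. This is an illustration, not Galton's procedure: he drew his line by hand, whereas the sketch fits it by ordinary least squares. It also treats the parents' height as a single number (for instance, the average of the pair) and uses a population average of 68 inches. Both are assumptions made for the example. The function names are hypothetical.

```python
def least_squares_slope(xs: list[float], ys: list[float]) -> float:
    """Slope of the ordinary-least-squares line fitted to the (x, y) points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    return cov / var_x


def predicted_child_height(parent_height: float, population_mean: float,
                           slope: float = 2 / 3) -> float:
    """Predict a child's height as only `slope` times as far from the mean as the parents."""
    return population_mean + slope * (parent_height - population_mean)


if __name__ == "__main__":
    mean = 68.0  # hypothetical population average, in inches
    for parents in (74.0, 62.0):
        child = predicted_child_height(parents, mean)
        print(f"parents {parents:.0f} in -> child about {child:.1f} in")
```

Parents six inches above the assumed average yield a predicted child four inches above it. Parents six inches below yield a predicted child four inches below. The prediction is pulled toward the mean from both sides.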

Regression analysis furnished a way of predicting one thing (a child’s height) from another (its parents’) when the two things were fuzzily related. Galton went on to develop a measure of the strength of such fuzzy relationships, one that could be applied even when the things related were different in kind — like rainfall and crop yield. He called this more general technique “correlation.”
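A correlation measure works across things of different kinds because it is unit-free. The sketch below uses the later product-moment formula associated with Karl Pearson, not Galton's own graphical method. The rainfall and crop-yield figures are made-up numbers for illustration only.

```python
import math


def correlation(xs: list[float], ys: list[float]) -> float:
    """Product-moment correlation: covariance divided by both standard deviations.

    Because the units cancel, xs and ys may be measured in entirely different units.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


if __name__ == "__main__":
    rainfall_in = [20.0, 25.0, 30.0, 35.0, 40.0]  # hypothetical inches of rain
    crop_yield = [2.1, 2.6, 2.9, 3.4, 3.6]         # hypothetical tons per acre
    rainfall_mm = [r * 25.4 for r in rainfall_in]  # same rain, different unit
    print(correlation(rainfall_in, crop_yield))
    print(correlation(rainfall_mm, crop_yield))    # same value: units do not matter
```

The result always falls between -1 and 1. Converting rainfall from inches to millimetres leaves it unchanged, which is what allows the measure to compare quantities that are "different in kind."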

The result was a major conceptual breakthrough. Until then, science had pretty much been limited to deterministic laws of cause and effect — which are hard to find in the biological world, where multiple causes often blend together in a messy way. Thanks to Galton, statistical laws gained respectability in science.

His discovery of regression toward mediocrity — or regression to the mean, as it is now called — has resonated even more widely. Yet, as straightforward as it seems, the idea has been a snare even for the sophisticated. The common misconception is that it implies convergence over time. If very tall parents tend to have somewhat shorter children, and very short parents tend to have somewhat taller children, doesn’t that mean that eventually everyone should be the same height? No, because regression works backward as well as forward in time: very tall children tend to have somewhat shorter parents, and very short children tend to have somewhat taller parents. The key to understanding this seeming paradox is that regression to the mean arises when enduring factors (which might be called “skill”) mix causally with transient factors (which might be called “luck”). Take the case of sports, where regression to the mean is often mistaken for choking or slumping. Major-league baseball players who managed to bat better than .300 last season did so through a combination of skill and luck. Some of them are truly great players who had a so-so year, but the majority are merely good players who had a lucky year. There is no reason that the latter group should be equally lucky this year; that is why around eighty per cent of them will see their batting average decline.
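The skill-plus-luck explanation, and the point that regression runs backward as well as forward, can be checked with a small simulation. This is a sketch under stated assumptions. Each hypothetical player has a fixed skill. Each season's score equals that skill plus fresh, independent, normally distributed luck of the same spread. The scores are abstract numbers, not batting averages.

```python
import random
import statistics


def simulate_seasons(n_players: int = 10_000, skill_sd: float = 1.0,
                     luck_sd: float = 1.0, seed: int = 0):
    """Return two seasons of scores for the same hypothetical players.

    Each score is the player's enduring skill plus independent luck for that season.
    """
    rng = random.Random(seed)
    skills = [rng.gauss(0.0, skill_sd) for _ in range(n_players)]
    season1 = [s + rng.gauss(0.0, luck_sd) for s in skills]
    season2 = [s + rng.gauss(0.0, luck_sd) for s in skills]
    return season1, season2


def top_group_means(ranked_by: list[float], other: list[float],
                    top_fraction: float = 0.1) -> tuple[float, float]:
    """Mean of `ranked_by` and of `other` for players in the top slice of `ranked_by`."""
    k = max(1, int(len(ranked_by) * top_fraction))
    top = sorted(range(len(ranked_by)), key=lambda i: ranked_by[i], reverse=True)[:k]
    return (statistics.fmean([ranked_by[i] for i in top]),
            statistics.fmean([other[i] for i in top]))


if __name__ == "__main__":
    s1, s2 = simulate_seasons()
    # Forward in time: last season's stars, on average, fall back this season.
    print("top of season 1 (season 1, season 2):", top_group_means(s1, s2))
    # Backward in time: this season's stars, on average, did worse last season.
    print("top of season 2 (season 2, season 1):", top_group_means(s2, s1))
    # No convergence: the overall spread is about the same in both seasons.
    print("spread:", statistics.pstdev(s1), statistics.pstdev(s2))
```

When skill and luck have equal spread, the top tenth's second-season average lands roughly halfway back toward the overall average, though it still stays above it. Ranking by the second season and looking back at the first shows the same drop. The two seasons' standard deviations match, so no force is pulling players toward mediocrity.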

To mistake regression for a real force that causes talent or quality to dissipate over time, as so many have, is to commit what has been called “Galton’s fallacy.” In 1933, a Northwestern University professor named Horace Secrist produced a book-length example of the fallacy in “The Triumph of Mediocrity in Business,” in which he argued that, since highly profitable firms tend to become less profitable, and highly unprofitable ones tend to become less unprofitable, all firms will soon be mediocre. A few decades ago, the Israeli Air Force came to the conclusion that blame must be more effective than praise in motivating pilots, since poorly performing pilots who were criticized subsequently made better landings, whereas high performers who were praised made worse ones. (It is a sobering thought that we might generally tend to overrate censure and underrate praise because of the regression fallacy.) More recently, an editorialist for the Times erroneously argued that the regression effect alone would insure that racial differences in I.Q. would disappear over time.

Did Galton himself commit Galton’s fallacy? Brookes insists that he did. “Galton completely misread his results on regression,” he argues, and wrongly believed that human heights tended “to become more average with each generation.” Even worse, Brookes claims, Galton’s muddleheadedness about regression led him to reject the Darwinian view of evolution, and to adopt a more extreme and unsavory version of eugenics. Suppose regression really did act as a sort of gravity, always pulling individuals back toward the average. Then it would seem to follow that evolution could not take place through a gradual series of small changes, as Darwin envisaged. It would require large, discontinuous changes that are somehow immune from regression to the mean.

Such leaps, Galton thought, would result in the appearance of strikingly novel organisms, or “sports of nature,” that would shift the entire bell curve of ability. And if eugenics was to have any chance of success, it would have to work the same way as evolution. In other words, these sports of nature would have to be enlisted to create a new breed. Only then could regression be overcome and progress be made.

In telling this story, Brookes makes his subject out to be more confused than he actually was. It took Galton nearly two decades to work out the subtleties of regression, an achievement that, according to Stephen M. Stigler, a statistician at the University of Chicago, “should rank with the greatest individual events in the history of science — at a level with William Harvey’s discovery of the circulation of blood and with Isaac Newton’s of the separation of light.” By 1889, when Galton published his most influential book, “Natural Inheritance,” his grasp of it was nearly complete. He knew that regression had nothing special to do with life or heredity. He knew that it was independent of the passage of time. Regression to the mean held even between brothers, he observed; exceptionally tall men tend to have brothers who are somewhat less tall. In fact, as Galton was able to show by a neat geometric argument, regression is a matter of pure mathematics, not an empirical force. Lest there be any doubt, he disguised the case of hereditary height as a problem in mechanics and sent it to a mathematician at Cambridge, who, to Galton’s delight, confirmed his finding.

Keep in mind that Galton was born in 1822 and was in his late 60s by the time he published "Natural Inheritance." Indeed, the book "The Wisdom of Crowds" begins with an anecdote about a major conceptual breakthrough that Galton came up with when he was 85. At a livestock fair, he found that the crowd's pooled guesses of an ox's weight came remarkably close to its true weight.

This is a content archive of VDARE.com, which Letitia James forced off of the Internet using lawfare.