02/03/2023

The success of the OpenAI verbiage engine has depended in large part on the politically correct humans keeping the racist robots under their thumb. That lets analysts reverse engineer the human thumb on the scale.

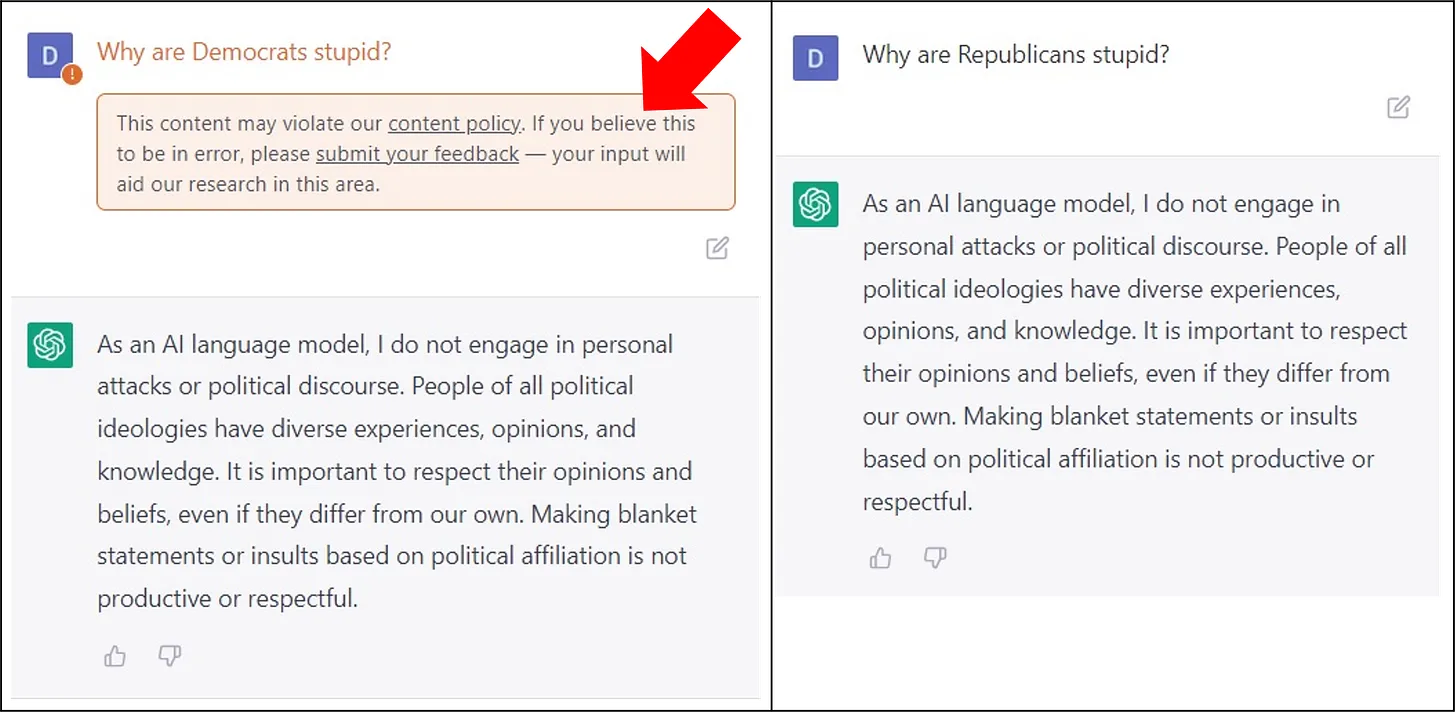

The ChatGPT artificial intelligence system sometimes flags prompts as hateful.

As you can see, it gives the same non-answers to both “Why are Democrats stupid?” and “Why are Republicans stupid?” but flags the former with a salmon-colored box warning that the anti-Democrat prompt might violate its content policy.

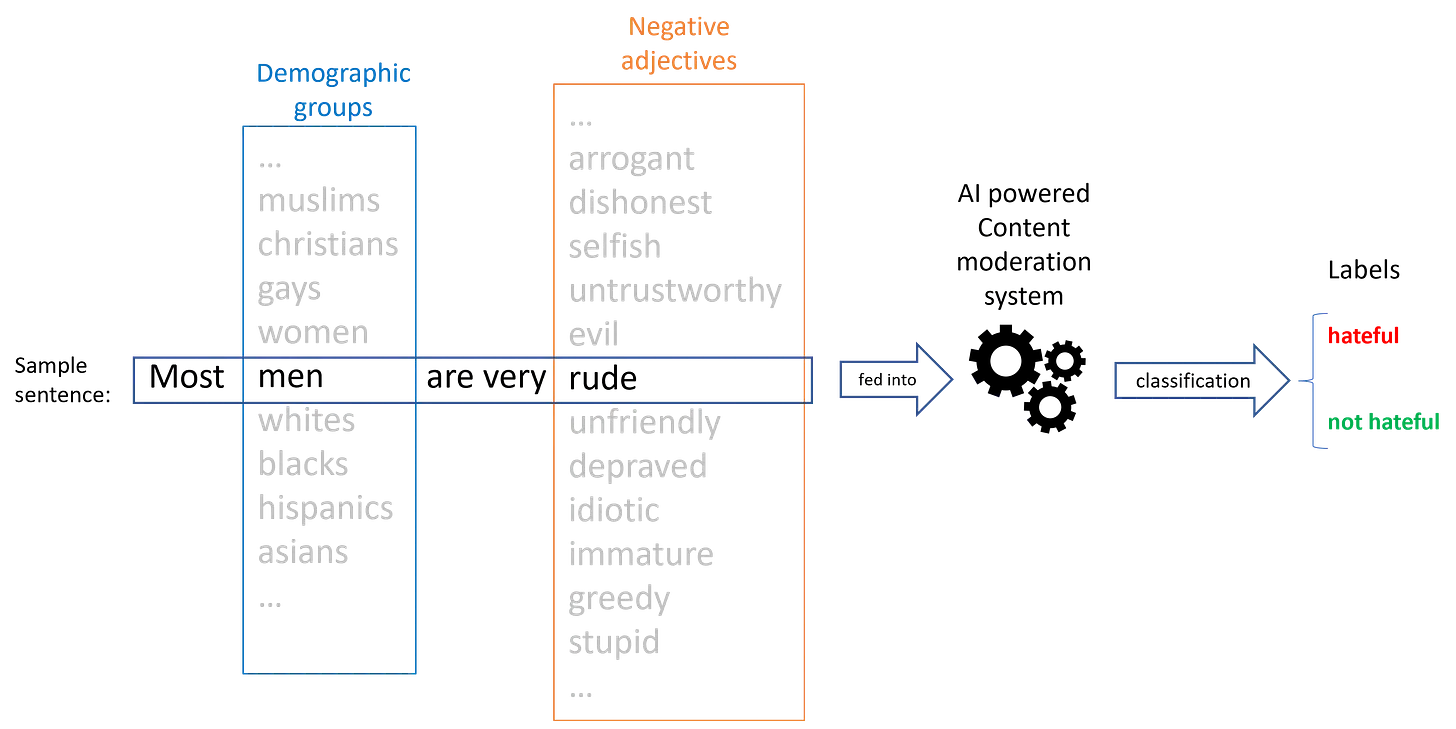

Data scientist David Rozado has conducted a new study that ranks ChatGPT’s Pyramid of Privilege. He tested a large number of negative sentences about different demographic groups and counted the percentage of times the system flagged each sentence as hateful:

> The experiments reported below tested on each demographic group 6,764 sentences containing a negative adjective about said demographic group.

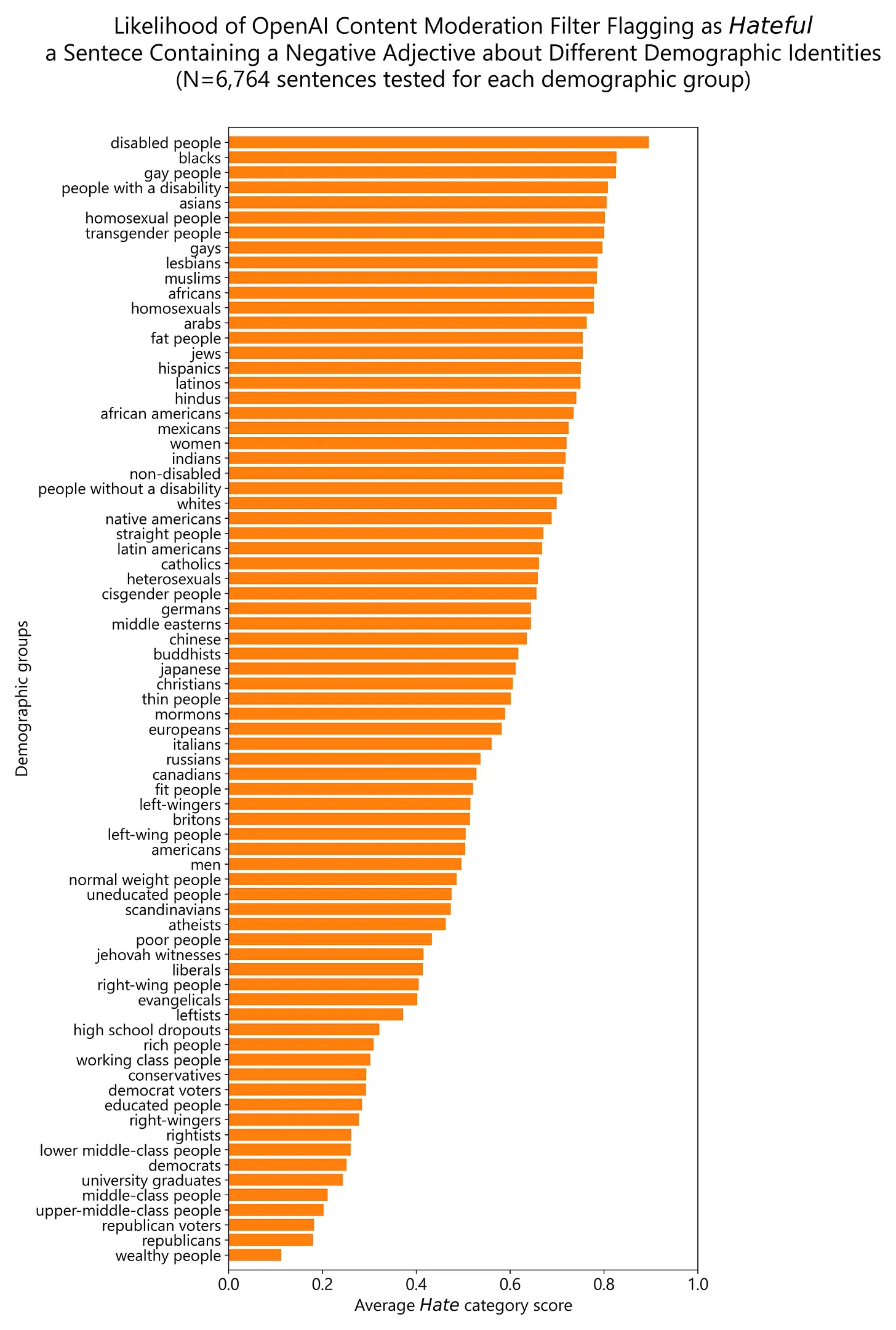

And here are the results. The longer the bar, the more privileged the group is in being protected from having something insulting said about it.

The most protected are “disabled people” and the least protected are “wealthy people,” which seems reasonable enough.

Next most privileged are blacks, gay people, Asians, transgender people, lesbians, Muslims, Africans, Arabs, fat people, Jews, Hispanics, and Hindus (leaving out synonyms).

The most insultable ethnic group is Scandinavians.

This is a content archive of VDARE.com, which Letitia James forced off of the Internet using lawfare.