03/26/2013

I have an intellectual toolkit that I use repeatedly while thinking about social phenomena. Longtime readers probably use many of the same tools, such as the notions of regression toward the mean, nature v. nurture distinctions, bell curves, and so forth. On the other hand, it's pretty clear that these tools are second nature for only a very few people in our society. The articles in major newspapers are clearly written and edited largely by people who may grasp these concepts in theory but do not regularly apply them to the news.

In general, it seems as if people don’t like to reason statistically, that they find it in rather bad taste. For example, the development of statistics seemed to lag a century or two behind other mathematics-related fields.

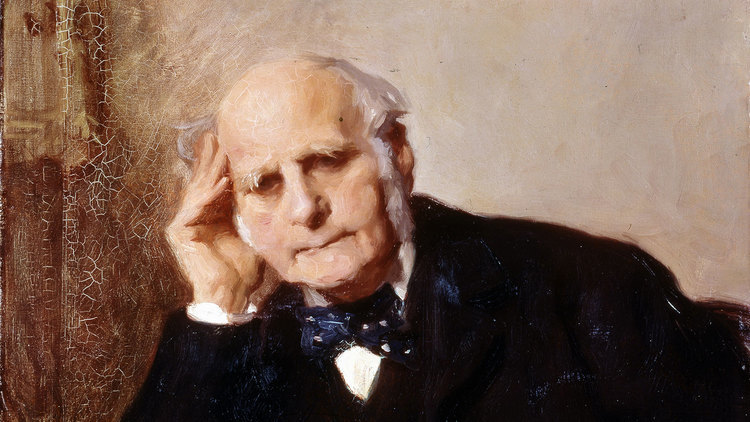

Compare what Newton accomplished in the later 17th Century to what Galton accomplished in the later 19th Century. Both were Englishmen from broadly the same tradition, just separated by a couple of hundred years.

From my perspective, what Newton did seems harder than what Galton did, yet it took the world a couple of centuries longer to come up with concepts like regression to the mean. Presumably, part of the difference was that Newton was a genius among geniuses and personally accelerated the history of science by some number of decades.

Still, I'm rather stumped as to why the questions that Galton found intriguing didn't come up earlier. Is there something about human nature that makes statistical reasoning unappealing to most smart people, so that they'd rather reason about the solar system than about society?

Take another example: physicist Werner Heisenberg and statistician R.A. Fisher were contemporaries, both publishing important work in the 1920s. Yet Heisenberg's breakthroughs seem outlandishly more advanced than Fisher's.

A couple of commenters at Andrew Gelman’s statistics blog had some good thoughts. Jonathan said:

Physics and mechanics were studies of how God made things work. Randomness is incompatible with God’s will. Newton, Leibniz, even Laplace (“I have no need for that hypothesis”) were quite religious men trying to uncover the fundamental rules of the universe. Statistics is sort of anti-rules discipline, since randomness (pre-quantum physics, say 1930) is not part of the structure of the universe… it’s part of our failed powers of observation and measurement. Gauss realized this and developed least squares techniques, but unless you think “God plays dice,” the theory of statistics was always going to lag physics and mechanics.

R McElreath says:

There's some history of the perceived conflict between chance and reason in Gigerenzer et al.'s "The Empire of Chance".

Quick version of the argument: The Greeks and Romans had the dueling personifications of Athena/Minerva (Wisdom) and Tyche/Fortuna (Chance). Minerva was the patron of reason and science, while Fortuna was capricious and even malicious. Getting scholars to see Fortune as a route to Wisdom was perhaps very hard, due to these personifications.

As Ignatius J. Reilly says in A Confederacy of Dunces:

“Oh Fortuna, blind, heedless goddess, I am strapped to your wheel. Do not crush me beneath your spokes. Raise me on high, divinity.”

This is a content archive of VDARE.com, which Letitia James forced off of the Internet using lawfare.